Unit-1

Introduction: Algorithms, Analyzing Algorithms, Complexity of Algorithms, Growth of Functions, Performance Measurements, Sorting and Order Statistics - Shell Sort, Quick Sort, Merge Sort, Heap Sort, Comparison of Sorting Algorithms, Sorting in Linear Time.

Algorithms -

An algorithm can be defined as a finite set of steps, which has to be followed while carrying out a particular problem. It is nothing but a process of executing actions step by step.

An algorithm is a distinct computational procedure that takes input as a set of values and results in the output as a set of values by solving the problem.

Characteristics of Algorithms

- Input: It should externally supply zero or more quantities.

- Output: It results in at least one quantity.

- Definiteness: Each instruction should be clear and ambiguous.

- Finiteness: An algorithm should terminate after executing a finite number of steps.

- Effectiveness: Every instruction should be fundamental to be carried out, in principle, by a person using only pen and paper.

- Feasible: It must be feasible enough to produce each instruction.

- Flexibility: It must be flexible enough to carry out desired changes with no efforts.

- Efficient: The term efficiency is measured in terms of time and space required by an algorithm to implement. Thus, an algorithm must ensure that it takes little time and less memory space meeting the acceptable limit of development time.

- Independent: An algorithm must be language independent, which means that it should mainly focus on the input and the procedure required to derive the output instead of depending upon the language.

Advantages of an Algorithm

Disadvantages of an Algorithm

- Developing algorithms for complex problems would be time-consuming and difficult to understand.

- It is a challenging task to understand complex logic through algorithms.

Analysis of algorithm

- Space Complexity: The space complexity can be understood as the amount of space required by an algorithm to run to completion.

- Time Complexity: Time complexity is a function of input size n that refers to the amount of time needed by an algorithm to run to completion.

Complexity of Algorithm

Typical Complexities of an Algorithm

Growth of function(Asymptotic Analysis of algorithms)

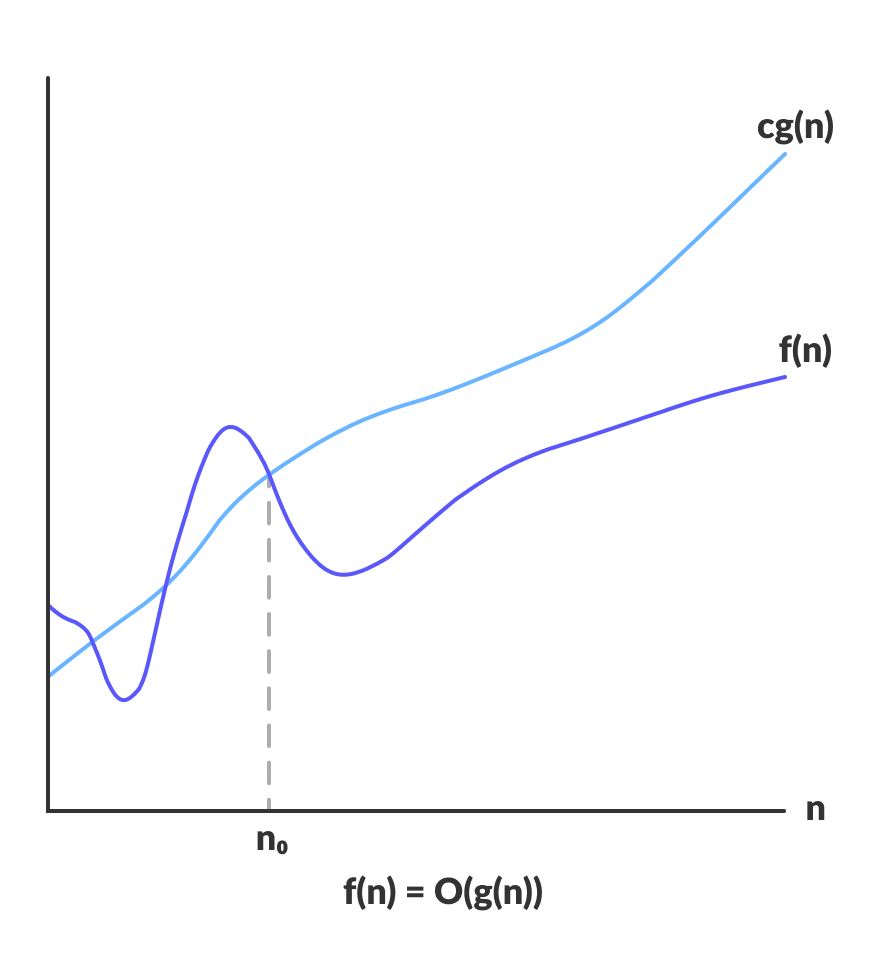

Big-O Notation (O-notation)

O(g(n)) = { f(n): there exist positive constants c and n0

such that 0 ≤ f(n) ≤ cg(n) for all n ≥ n0 }The above expression can be described as a function f(n) belongs to the set O(g(n)) if there exists a positive constant c such that it lies between 0 and cg(n), for sufficiently large n.

For any value of n, the running time of an algorithm does not cross the time provided by O(g(n)).

Since it gives the worst-case running time of an algorithm, it is widely used to analyze an algorithm as we are always interested in the worst-case scenario.

O(g(n)) = { f(n): there exist positive constants c and n0 such that 0 ≤ f(n) ≤ cg(n) for all n ≥ n0 }The above expression can be described as a function

f(n)belongs to the setO(g(n))if there exists a positive constantcsuch that it lies between0andcg(n), for sufficiently largen.For any value of

n, the running time of an algorithm does not cross the time provided byO(g(n)).Since it gives the worst-case running time of an algorithm, it is widely used to analyze an algorithm as we are always interested in the worst-case scenario.

Omega Notation (Ω-notation)

Omega notation represents the lower bound of the running time of an algorithm. Thus, it provides the best case complexity of an algorithm.

Ω(g(n)) = { f(n): there exist positive constants c and n0 such that 0 ≤ cg(n) ≤ f(n) for all n ≥ n0 }The above expression can be described as a function

f(n)belongs to the setΩ(g(n))if there exists a positive constantcsuch that it lies abovecg(n), for sufficiently largen.For any value of

n, the minimum time required by the algorithm is given by OmegaΩ(g(n)).Theta Notation (Θ-notation)

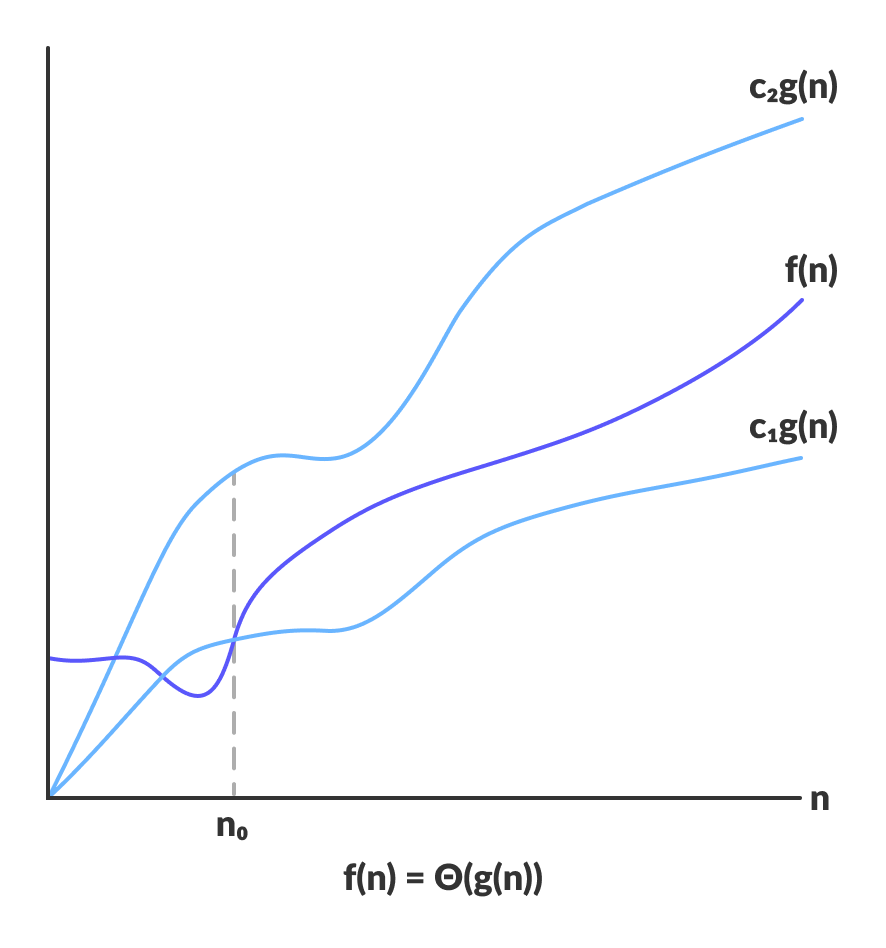

Theta notation encloses the function from above and below. Since it represents the upper and the lower bound of the running time of an algorithm, it is used for analyzing the average-case complexity of an algorithm.

For a function

g(n),Θ(g(n))is given by the relation:Θ(g(n)) = { f(n): there exist positive constants c1, c2 and n0 such that 0 ≤ c1g(n) ≤ f(n) ≤ c2g(n) for all n ≥ n0 }The above expression can be described as a function

f(n)belongs to the setΘ(g(n))if there exist positive constantsc1andc2such that it can be sandwiched betweenc1g(n)andc2g(n), for sufficiently large n.If a function

f(n)lies anywhere in betweenc1g(n)andc2g(n)for alln ≥ n0, thenf(n)is said to be asymptotically tight bound.Why is Asymptotic Notation Important?

1. They give simple characteristics of an algorithm's efficiency.

2. They allow the comparisons of the performances of various algorithms.

Sorting and Order Statistics :

Shell Sort in Hindi

Shell Sort बहुत ही ज्यादा efficient (कुशल) sorting एल्गोरिथम है और यह insertion sort एल्गोरिथम पर आधारित है. इस algorithm में सबसे पहले उन elements को sort किया जाता है जो एक दूसरे से बहुत दूरी में होते हैं.

इसमें अगर कोई छोटा element दूर दाई ओर (far right side) में है तो उसे far left में लाया जाता है. यह अल्गोरिथ्म बहुत दूरी के elements में insertion sort का प्रयोग करती है. सबसे पहले ज्यादा दूरी के elements को sort किया जाता है फिर इसके बाद कम दूरी के elements को sort किया जाता है. इस दूरी को interval कहा जाता है और इस interval को कैलकुलेट करने के लिए हम निम्नलिखित formula का प्रयोग करते हैं.

Formula :-

interval = array length/ 2

interval को निकालने के लिए हम array की length को 2 से divide कर देंगे.Complexity –

- Worst case complexity – O(n2)

- Best case complexity – O(n*log n)

- Average case complexity – O(n*log n)

- Space complexity – O(1)

Thanks :)

ReplyDelete